Resume a Pipeline


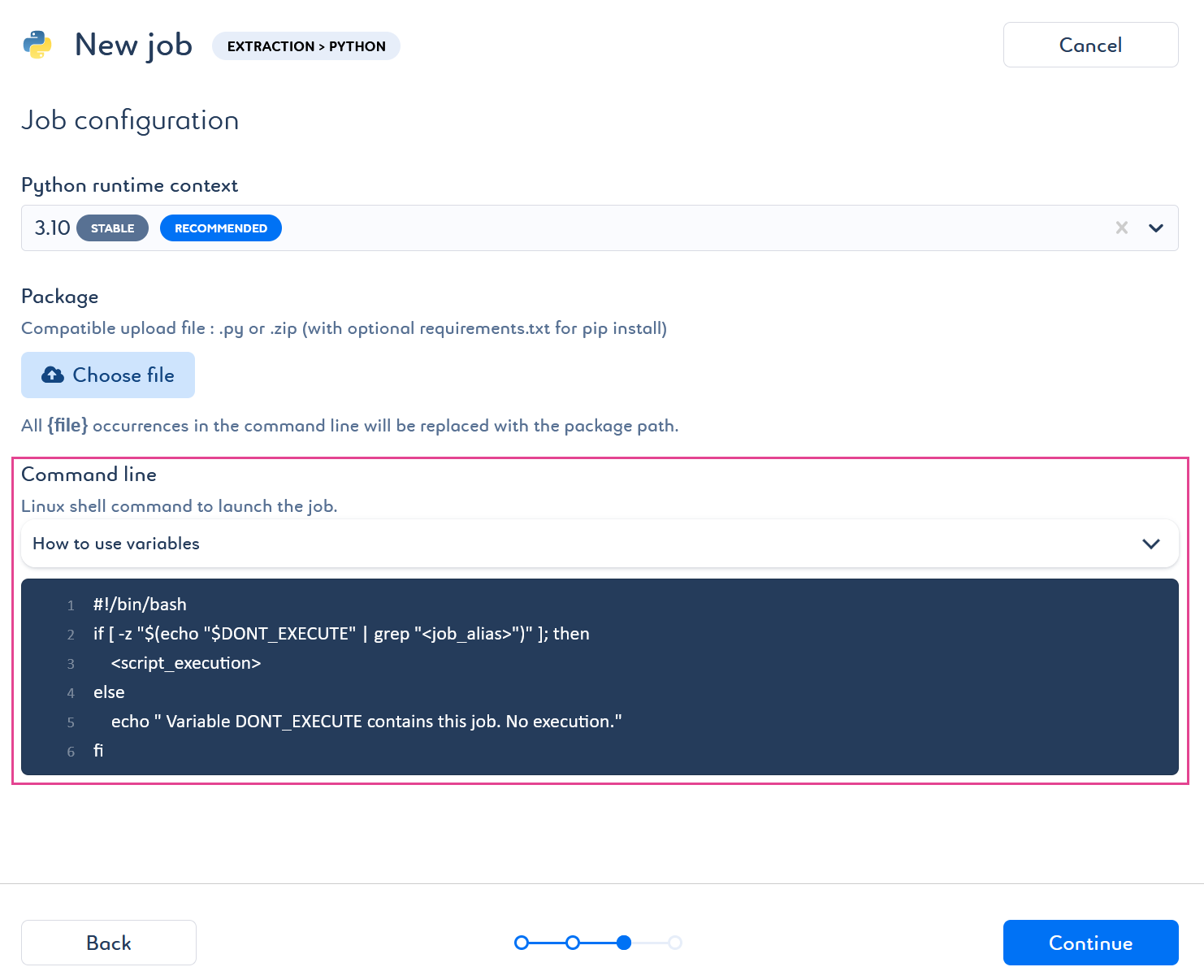

Create new jobs for your pipeline or use existing jobs. Each job must contain the following script in its command line:

    #!/bin/bash
    if [ -z "$(echo "$DONT_EXECUTE" | grep "<job_alias>")" ]; then
      <script_execution>
    else
      echo "Variable DONT_EXECUTE contains this job. No execution."
    fi

Where:

- `<script_execution>` must be replaced with the job execution script, that is, the name of the code file uploaded in the package of the job when it was created.
- `<job_alias>` must be replaced with the alias of the job. You can find it in the job Overview page.

You can modify the command line of existing jobs by upgrading them.
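As an illustration, the sketch below fills in the template for a job with the hypothetical alias `load_data`. Note that `grep` matches substrings, so an alias such as `load` would also be matched by a list containing `load_data`. The `should_run` helper shown here is not part of the product. It is one possible stricter check, assuming that aliases in `DONT_EXECUTE` are separated by commas with no surrounding spaces.

```shell
#!/bin/bash
# Hypothetical alias, used for illustration only.
JOB_ALIAS="load_data"

# Returns 0 (true) when the alias is NOT listed in DONT_EXECUTE.
# Assumption: aliases are comma-separated, without spaces.
should_run() {
  # Wrap both sides in commas so that only whole aliases match:
  # "load" does not match an entry named "load_data".
  case ",${DONT_EXECUTE:-}," in
    *",$1,"*) return 1 ;;
    *) return 0 ;;
  esac
}

if should_run "$JOB_ALIAS"; then
  # Placeholder: replace this line with the real job execution script.
  echo "Running $JOB_ALIAS"
else
  echo "Variable DONT_EXECUTE contains this job. No execution."
fi
```

If your aliases never overlap, the simpler `grep` check from the template behaves the same way.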


Create a new pipeline or use an existing one.

If you use an existing pipeline, check that its jobs have the required script in their command line, as described in the previous step.

Run your pipeline.


According to the result, create the `$DONT_EXECUTE` pipeline environment variable with the list of job aliases that you do not want to run.

You can modify this variable as many times as required; it is up to you to update its value according to the results of your pipeline. You do not need to create a new variable every time you run your pipeline. However, if you have several pipelines, you must create an environment variable at the pipeline level for each of them. This selective execution lets you skip the jobs that do not need to run again, so you avoid rerunning the entire pipeline.
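To make the effect concrete, the following sketch simulates locally a three-job pipeline in which the first two jobs succeeded on a previous run. The aliases `extract`, `transform`, and `load`, the comma separator, and the `run_job` helper are all hypothetical. On the platform, the variable is created at the pipeline level rather than set in a shell.

```shell
#!/bin/bash
# Simulated value of the pipeline environment variable: the two jobs
# that already succeeded are listed, so only "load" should run again.
DONT_EXECUTE="extract,transform"

# Hypothetical stand-in for a job's command line. It uses the same
# grep check as the script that each job must contain.
run_job() {
  if [ -z "$(echo "$DONT_EXECUTE" | grep "$1")" ]; then
    echo "$1: executed"
  else
    echo "$1: skipped"
  fi
}

for job in extract transform load; do
  run_job "$job"
done
```

With this value, the loop reports `extract` and `transform` as skipped and `load` as executed. Emptying the variable makes every job run again.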


There are several solutions that can be considered to automate this process.
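One possible direction, shown only as a sketch, is to derive the value of `DONT_EXECUTE` from the outcome of the previous run. The example assumes that you can obtain, by whatever means, one line per job in the form `alias status`. Both this input format and the `SUCCEEDED` status name are assumptions made for the illustration, and `build_dont_execute` is a hypothetical helper.

```shell
#!/bin/bash
# Reads "alias status" pairs on stdin and prints the comma-separated
# aliases whose status is SUCCEEDED.
# Assumption: input format and status names are illustrative only.
build_dont_execute() {
  local list="" job_alias status
  while read -r job_alias status; do
    if [ "$status" = "SUCCEEDED" ]; then
      # Append with a comma only when the list is not empty.
      list="${list:+$list,}$job_alias"
    fi
  done
  echo "$list"
}

# Example input: two jobs succeeded, one failed.
printf '%s\n' "extract SUCCEEDED" "transform SUCCEEDED" "load FAILED" | build_dont_execute
```

The resulting string can then be used as the new value of the pipeline environment variable before the next run.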
