Copy a File From HDFS to the Local Computer

To follow this procedure, you need a Repository that stores the connection information for the Saagie platform. To set this up, create a context group and define the required contexts and variables.

In this case, define the following context variables:

| Name | Type | Value |
|---|---|---|
| IP_HDFS | String | Your HDFS IP address |
| Port_HDFS | String | Your HDFS port |
| | String | The folder to be selected in HDFS |
| | String | The name of the file to be selected from HDFS |
| | Directory | The local directory in which to store the files retrieved from HDFS |
| | String | The name for the retrieved file |
| | String | The HDFS user authentication name |

Once your context group is created and stored in the Repository, you can apply the Repository context variables to your jobs.

For more information, read the whole "Using contexts and variables" section of the Talend Studio User Guide.

- Create a new job in Talend.

-

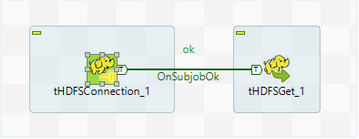

Add the following components:

-

tHDFSConnection, to establish an HDFS connection reusable by other HDFS components. -

tHDFSGet, to copy files from HDFS in a user-defined directory.

-

- Link these components with the OnSubjobOk connection.

- Double-click each component and configure its settings as follows.

For tHDFSConnection, in the Basic settings tab:

- From the Property type list, select Built-in so that no property data is stored centrally.
- From the Distribution list, select the Cloudera cluster.
- From the Version list, select the latest version of Cloudera.
- In the NameNode URI field, enter the URI of the Hadoop NameNode, the master node of a Hadoop system. It must follow the pattern `hdfs://ip_hdfs:port_hdfs`. Use context variables if possible: `"hdfs://"+context.IP_HDFS+":"+context.Port_HDFS+"/"`
- In the Username field, enter the HDFS user authentication name.
- Enter the following Hadoop properties:

  | Property | Value |
  |---|---|
  | "dfs.nameservices" | "nameservice1" |
  | "dfs.ha.namenodes.cluster" | "nn1,nn2" |
  | "dfs.client.failover.proxy.provider.cluster" | "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider" |
  | "dfs.namenode.rpc-address.cluster.nn1" | "nn1:8020" |
  | "dfs.namenode.rpc-address.cluster.nn2" | "nn2:8020" |

- Disable the Use datanode hostname option.
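As a minimal sketch of the NameNode URI expression above, the following Java snippet builds the same string from two values and checks that the result has the `hdfs://host:port/` shape. The class `NameNodeUri`, its methods, and the sample address `192.0.2.10` are hypothetical and used only for illustration; the port `8020` is borrowed from the property values listed above. In a Talend job, the expression is entered directly in the NameNode URI field, with `context.IP_HDFS` and `context.Port_HDFS` supplying the values.

```java
import java.net.URI;

// Hypothetical helper, not part of Talend: it mirrors the expression
// "hdfs://"+context.IP_HDFS+":"+context.Port_HDFS+"/" outside of a job.
class NameNodeUri {

    // Concatenates the parts exactly as the NameNode URI field expression does.
    static String build(String ipHdfs, String portHdfs) {
        return "hdfs://" + ipHdfs + ":" + portHdfs + "/";
    }

    // Parses the built string and rejects anything that is not hdfs://host:port/.
    static URI parse(String ipHdfs, String portHdfs) {
        URI uri = URI.create(build(ipHdfs, portHdfs));
        if (!"hdfs".equals(uri.getScheme()) || uri.getHost() == null || uri.getPort() < 0) {
            throw new IllegalArgumentException("Expected hdfs://host:port/ but got " + uri);
        }
        return uri;
    }

    public static void main(String[] args) {
        // Placeholder values standing in for context.IP_HDFS and context.Port_HDFS.
        URI uri = parse("192.0.2.10", "8020");
        System.out.println(uri); // prints hdfs://192.0.2.10:8020/
    }
}
```

Checking the string this way before pasting values into the context group can catch a missing port or a stray space early, since such mistakes otherwise only surface when the job tries to connect.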

For more information, refer to Talend's documentation on the tHDFSConnection component.

For tHDFSGet, in the Basic settings tab:

- From the Property type list, select Built-in so that no property data is stored centrally.
- Select the Use an existing connection option.
- From the Component List list, select the tHDFSConnection connection component to reuse the connection details already defined.
- In the HDFS directory field, browse to or enter the path to the data to use in the file system.
- In the Local directory field, browse to or enter the local directory in which to store the files retrieved from HDFS.
- In the Files field, enter a File mask, that is, the name of the file to select from HDFS. You can enter a New name for the retrieved file if required.

For more information, refer to Talend's documentation on the tHDFSGet component.
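The File mask and New name settings can be pictured with a small Java sketch. It assumes that the file mask behaves like a shell-style wildcard (glob) pattern; this is an assumption made here for illustration, not something stated on this page. The class `FileMaskSketch`, the method `localTarget`, and the sample paths are hypothetical. The sketch only computes where a matching file would land locally and does not contact HDFS.

```java
import java.nio.file.FileSystems;
import java.nio.file.Path;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;

// Hypothetical illustration of the Files settings; not Talend's implementation.
class FileMaskSketch {

    // Returns the local path a file would be written to, or null when the
    // HDFS file name does not match the mask (assumed to be a glob pattern).
    static Path localTarget(String localDir, String fileMask, String newName, String hdfsFileName) {
        PathMatcher matcher = FileSystems.getDefault().getPathMatcher("glob:" + fileMask);
        if (!matcher.matches(Paths.get(hdfsFileName))) {
            return null;
        }
        // An empty New name keeps the original file name.
        String targetName = (newName == null || newName.isEmpty()) ? hdfsFileName : newName;
        return Paths.get(localDir).resolve(targetName);
    }

    public static void main(String[] args) {
        // Placeholder directory and file names.
        System.out.println(localTarget("/tmp/from_hdfs", "*.csv", "", "sales.csv"));
        System.out.println(localTarget("/tmp/from_hdfs", "sales.csv", "copy.csv", "sales.csv"));
        System.out.println(localTarget("/tmp/from_hdfs", "*.csv", "", "notes.txt"));
    }
}
```

With an exact file name as the mask, as in the context variables defined earlier, only that one file matches, and the New name column decides what it is called in the local directory.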

- Run the job.

See also: Read a file with HDFS with Talend (GitHub page)